Amid the rise of fake news and disinformation, the Australian government is turning to artificial intelligence (AI). The move reflects a worldwide trend of governments and organisations using AI to protect the accuracy of information in the digital age. Advanced AI technologies, including machine learning and natural language processing, can rapidly examine large data sets to detect and flag inaccurate information.

AI tools tackling misinformation

AI technologies such as machine learning algorithms and natural language processing are instrumental in detecting disinformation and limiting its effects. These tools can analyse large volumes of data to identify patterns and irregularities that may indicate inaccurate information. According to the Carnegie Endowment for International Peace, AI can quickly analyse social media content and identify potential instances of disinformation.

This speed enables quicker responses, helping to stop false information from spreading before it can cause substantial damage. The Australian government is also examining AI regulations to address the misuse of AI to spread disinformation. Meanwhile, technology companies from around the world have come together to create a Global Tech Accord aimed at setting ethical standards for the use of AI, particularly in the context of elections.

AI also plays an important role in combating disinformation by improving digital literacy and raising awareness. AI-powered educational tools and platforms help the public develop the skills to recognise and counter misinformation. By helping users gauge the reliability of information in real time, these tools encourage a more informed and discerning audience.

Australian government AI regulations

The Australian government has taken significant steps to regulate AI and combat misinformation. The Australian Communications and Media Authority (ACMA) oversees a Code of Practice on Disinformation and Misinformation, under which signatory digital platforms commit to specific measures to curb the spread of false information. The government is also considering additional regulations to address AI-generated deepfakes and other advanced forms of misinformation.

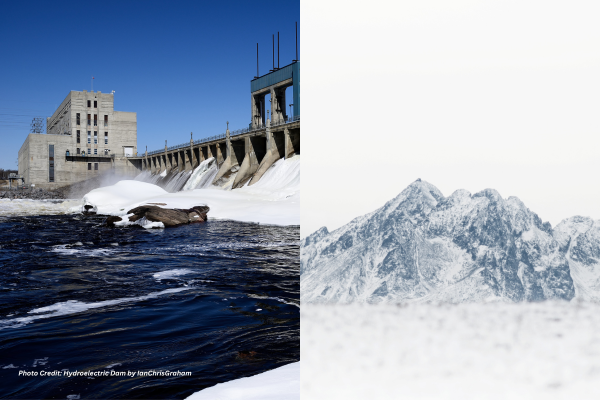

Deepfakes, which use AI to manipulate or fabricate audio and video so that they depict events or statements that never occurred, present a substantial challenge to the integrity of news. More broadly, the government is exploring the potential of AI across various sectors while taking steps to mitigate its risks. This includes work on establishing a national data centre with dependable, robust data infrastructure and efficient data management. The centre aims to provide reliable and cost-effective internet access, which is critical for successful AI implementation and oversight.

International efforts against AI disinformation

Governments and industry around the world are coming together to tackle the problems posed by AI-fuelled disinformation. One notable effort is the Global Tech Accord, an agreement backed by technology companies from multiple countries that seeks to address the deceptive use of AI, particularly in relation to elections. International organisations also play a crucial role alongside governments. For instance, the World Economic Forum (WEF) is developing frameworks for AI governance that aim to encourage the responsible application of AI technologies. In addition, the annual AI for Good Summit, the United Nations' leading platform on AI, underscores the importance of establishing standards to tackle misinformation and deepfakes.

The summit brings together academics, industry representatives, senior executives and leading experts, alongside 47 partners from the UN system. Tackling AI-driven misinformation requires a collective effort from governments, international organisations and other stakeholders. These efforts seek to harness the power of AI responsibly while addressing the dangers of misinformation.

AI challenges and risks

Although AI technologies hold great potential, they also present substantial challenges and risks. One major issue is false positives, where AI systems mistakenly flag genuine content as fake. This could erode confidence in AI systems and lead to unwarranted censorship of legitimate information. The fast pace of AI development poses another obstacle: regulatory frameworks must constantly evolve to address emerging threats and remain effective.

Keeping pace is difficult given the complexity and rapid progress of AI technology. Overregulation carries its own risks, however. While regulation is important for addressing the potential misuse of AI, it must be balanced so that it does not stifle innovation or restrict beneficial applications of AI. Striking that balance between regulation and innovation requires careful navigation.

Moreover, AI itself can contribute to the spread of false information, which is a major concern. AI can reduce the cost and labour required to create and disseminate false information. The consequences can be serious, including destabilising societies, disrupting electoral processes and undermining trust in media and government sources. Strong strategies to mitigate these risks are therefore essential.

Future of government AI initiatives

The Australian government is making significant investments to strengthen its AI capabilities and improve its regulatory measures. This includes public consultations to gather input on how to balance AI innovation with risk mitigation. The objective is to establish a strong regulatory framework that safeguards against misinformation while encouraging beneficial uses of AI. There is growing concern that AI, particularly machine learning, will greatly enhance disinformation campaigns: covert operations that intentionally spread false or misleading information.

On the other hand, AI can be a valuable asset in the fight against disinformation. Advanced AI systems can analyse patterns, language use and context to assist with content moderation, fact-checking and the identification of false information. The World Economic Forum has identified misinformation and disinformation as significant risks in the years to come. As deepfakes and AI-generated content continue to proliferate, voters find it increasingly difficult to distinguish fact from falsehood, which highlights the dangers of using AI for political purposes. This could influence voter behaviour and undermine the democratic process.

The Australian government’s proactive stance on harnessing AI to combat misinformation is a vital step in safeguarding the accuracy and reliability of information. By developing robust regulations, fostering global collaboration and encouraging ongoing innovation, Australia is well placed to address the challenges of AI-driven disinformation while leveraging the benefits this technology can bring to society. The government’s commitment to improving AI capabilities and implementing regulations reflects both an understanding of AI’s significant impact and a resolve to ensure that this impact is positive.

The emphasis on public consultations reflects the government’s commitment to inclusivity and its recognition that diverse perspectives matter in shaping the future of AI. As AI technologies advance, the government’s AI-driven solutions to combat fake news, and its broader strategies against disinformation, are expected to evolve with them.

Justin Lavadia is a content producer and editor at Public Spectrum with a diverse writing background spanning various niches and formats. With a wealth of experience, he brings clarity and concise communication to digital content. His expertise lies in crafting engaging content and delivering impactful narratives that resonate with readers.